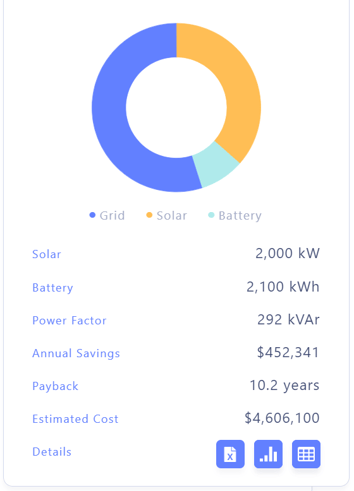

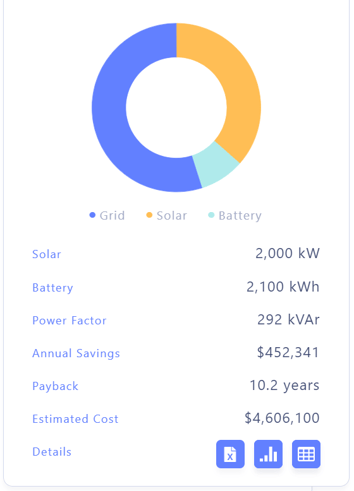

An important part of the Beam platform deals with running assessments to predict the benefits of solar and batteries to reduce costs and emissions.

An assessment draws on three main inputs:

- the power usage figures from the past year

- the installable solar panel capacity and the expected watt-hours generated

- the fixed and time-of-use electricity charges

With these inputs the platform is able to work out various combinations of solar and battery size to show the expected savings and payback period or operational cost from a PPA.

The Beam platform already made those assessments, but as the number of combinations grew, the assessment times started to creep up.

Over the last couple of months we've re-engineered our assessment calculation pipeline to make it faster.

The first step was going back to first principles, pulling apart the various aspects of the calculations and defining the new flow.

Power usage numbers come from the interval data that providers supply in a variety of formats. So the first step is validating the interval data inputs and interpreting the columns for date, kW/kWh and kVA. Dates are mostly recorded without daylight saving adjustments, but sometimes they are in local time.

The output of this phase is a standard internal format of interval data that is cached and ready for the subsequent calculations: basically an array of half-hourly values of Wh and VA.
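The normalisation step can be pictured with a minimal sketch. It is written in Python purely for illustration (our production code is Clojure), and the names `Reading` and `to_half_hourly` are hypothetical. It assumes each reading carries an average kW and kVA for a half-hour interval, that timestamps are already in standard time, and that missing intervals default to zero.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

INTERVAL = timedelta(minutes=30)


@dataclass
class Reading:
    start: datetime  # interval start, assumed to be standard time (no DST shift)
    kw: float        # average real power over the interval
    kva: float       # apparent power for the interval


def to_half_hourly(readings, period_start, slots):
    """Return (wh, va) arrays with one value per half-hour slot."""
    wh = [0.0] * slots  # assumption: slots without a reading stay at zero
    va = [0.0] * slots
    for r in readings:
        offset = r.start - period_start
        if offset % INTERVAL != timedelta(0):
            raise ValueError(f"reading at {r.start} is not on a half-hour boundary")
        index = offset // INTERVAL
        if not 0 <= index < slots:
            raise ValueError(f"reading at {r.start} is outside the period")
        wh[index] = r.kw * 1000 * 0.5  # average kW over half an hour -> Wh
        va[index] = r.kva * 1000       # kVA -> VA
    return wh, va
```

For example, a 2 kW average over one half-hour interval becomes 1000 Wh in the corresponding slot.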

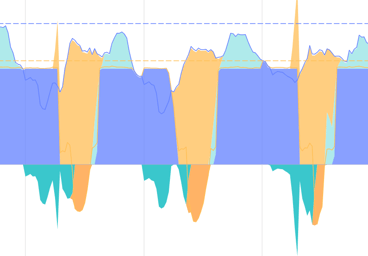

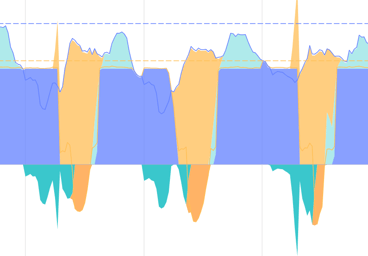

The new flow pre-calculates the total solar output of each considered panel configuration as another half-hourly array, so the arrays of power usage and solar output are aligned on the same interval periods.

This model allows for efficient calculation of power usage after solar, as it is simply the difference of the two arrays. It also reduces memory usage compared with our old approach of storing all interval data points in a key/value hashmap.
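As a rough illustration of that difference (a Python sketch, not our Clojure code; `usage_after_solar` is a hypothetical name), the calculation reduces to an element-wise subtraction of two aligned arrays. A negative value in the result is read here as surplus solar in that half hour.

```python
def usage_after_solar(usage_wh, solar_wh):
    """Element-wise usage minus solar output for aligned half-hourly arrays."""
    if len(usage_wh) != len(solar_wh):
        raise ValueError("usage and solar arrays must cover the same intervals")
    # Negative results mean solar produced more than the site used in that slot.
    return [usage - solar for usage, solar in zip(usage_wh, solar_wh)]
```

With usage of `[500.0, 800.0, 300.0]` and solar output of `[0.0, 1000.0, 300.0]`, the result is `[500.0, -200.0, 0.0]`.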

Running the battery simulation is the most intricate part of the calculations. Batteries have a few different uses: moving available solar power to non-sun hours or to expensive peak charge periods, reducing the overall peak demand levels that incur extra network charges, and providing a (limited) amount of back-up power in case of a grid outage.

The combination of these requirements and the specific tariff structure for a site's load pattern means running the battery simulation quite a few times.

Our new implementation teases apart the decision on when to charge or discharge the battery from the actual (dis)charge calculation.
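One way to picture this separation is the Python sketch below. It is an illustration under stated assumptions rather than our Clojure implementation: the names `Battery`, `apply_request`, `solar_shifting` and `simulate` are hypothetical, and the battery model ignores efficiency losses. The strategy only decides how much energy it would like to move in a slot, while `apply_request` enforces the physical limits.

```python
from dataclasses import dataclass


@dataclass
class Battery:
    capacity_wh: float       # usable storage
    max_step_wh: float       # most energy that can move in or out per half hour
    stored_wh: float = 0.0


def apply_request(battery, request_wh):
    """(Dis)charge calculation: positive charges, negative discharges.

    Returns the energy actually moved after applying the battery's limits.
    """
    if request_wh >= 0:
        room = battery.capacity_wh - battery.stored_wh
        moved = min(request_wh, battery.max_step_wh, room)
    else:
        moved = -min(-request_wh, battery.max_step_wh, battery.stored_wh)
    battery.stored_wh += moved
    return moved


def solar_shifting(net_wh, slot):
    """Decision: charge with any solar surplus, discharge to cover any deficit."""
    return -net_wh


def simulate(net_wh, battery, strategy):
    """Run one strategy over usage-after-solar values; returns grid energy per slot."""
    grid = []
    for slot, net in enumerate(net_wh):
        moved = apply_request(battery, strategy(net, slot))
        grid.append(net + moved)
    return grid
```

Swapping in a different strategy function, for example one that only discharges during peak charge periods, would leave `apply_request` untouched.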

Another optimisation is in the peak demand lopping code. Peak lopping answers the question of what reduction in current peak demand can be achieved with a combination of solar and battery.

The old approach calculated the results for a large number of possible thresholds before choosing the best one. The new approach starts with a value of 50% of current peak demand and bisects from there: it moves to the next percentage up when the threshold is met, or down when the threshold is not achieved.
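The bisection idea can be sketched as follows in Python (for illustration only; our code is Clojure). Several details are assumptions: the percentage is treated as the fraction by which peak demand is reduced, `achievable` is a hypothetical predicate that would run the battery simulation for that target, and the search assumes that if a given reduction is achievable then every smaller one is too.

```python
def best_peak_reduction(achievable, tolerance=0.01):
    """Bisect for the largest achievable peak reduction fraction in [0, 1].

    `achievable(fraction)` is a hypothetical callback returning True when
    solar plus battery can hold demand at (1 - fraction) of the current peak.
    """
    low, high = 0.0, 1.0
    best = 0.0
    while high - low > tolerance:
        candidate = (low + high) / 2  # the first candidate is 50%
        if achievable(candidate):
            best = candidate
            low = candidate           # target met: try a larger reduction
        else:
            high = candidate          # target missed: try a smaller reduction
    return best
```

With a tolerance of one percentage point this needs seven simulation runs, instead of one run per candidate threshold.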

The final step is applying the charges to the simulated power usage and aggregating the half-hourly results into daily, monthly and yearly outputs.
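A simplified Python sketch of this last step is shown below (illustrative only, not our Clojure code). The tariff is a made-up example: a peak rate between 07:00 and 21:00, an off-peak rate otherwise, a fixed daily charge, and no payment for exported energy. The names `slot_cost` and `aggregate_costs` are hypothetical, and real network tariffs are more involved.

```python
from collections import defaultdict
from datetime import datetime, timedelta

INTERVAL = timedelta(minutes=30)


def slot_cost(grid_wh, start, peak_rate, offpeak_rate):
    """Energy cost of one half-hour slot; rates are per kWh."""
    rate = peak_rate if 7 <= start.hour < 21 else offpeak_rate  # assumed peak window
    return max(grid_wh, 0.0) / 1000 * rate  # assumption: exports earn nothing


def aggregate_costs(grid_wh, period_start, peak_rate, offpeak_rate, daily_fixed):
    """Return (daily, monthly, total) costs for a half-hourly grid usage array."""
    daily = defaultdict(float)
    for index, wh in enumerate(grid_wh):
        start = period_start + index * INTERVAL
        daily[start.date()] += slot_cost(wh, start, peak_rate, offpeak_rate)
    for day in daily:
        daily[day] += daily_fixed  # fixed charge applies once per day
    monthly = defaultdict(float)
    for day, cost in daily.items():
        monthly[(day.year, day.month)] += cost
    return dict(daily), dict(monthly), sum(daily.values())
```

Because the half-hourly array is ordered and aligned to a known start, each slot's timestamp is derived from its index rather than stored alongside the value.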

To find the critical performance bottlenecks in the simulation, we make heavy use of profiling. Our codebase is written in Clojure and we've had great results using clj-async-profiler (https://github.com/clojure-goes-fast/clj-async-profiler) to produce the flame graphs that identify code hotspots. Repeatedly improving the slowest parts of the calculation and rerunning it under the profiler makes for an effective process of getting to good-enough performance, based on real performance data.

Of course, fast calculations are nice, but we also need to verify their correctness.

Our approach is that a solar engineer sets up a model in a spreadsheet with some specific inputs and the expected outputs, and the software engineer uses that as the basis for automated unit tests that check the calculated predictions are in line with the model's predictions.

This works well up to the point where modeling advanced battery strategies in a spreadsheet becomes quite cumbersome 😀

Our initial improvements have been rolled out into the Beam platform, and we continue to extend our range of assessment scenarios and to include the latest updates to network tariff structures!
